AI usability testing with simulated users: meet your new design partner

- Ayesha Salim

- May 3, 2025


How AI agents can simulate user behaviour to help you test, iterate and improve faster

Today, UI/UX designers rely on usability testing to understand whether a product is actually meeting user needs. But what if you could spot design flaws before running a live study? According to a new paper, you can. UXAgent, an AI-driven tool, offers simulated usability testing in which AI Agents act like real human users. This gives UX designers a way to gather early feedback without the time, cost, and coordination that real user studies demand.

But testing with simulated AI Agents is not the same thing as testing with real users, right?

Right, and the researchers are very clear about this:

Our position is that LLM Agents are not to replace human participants in UX study but rather, to be more responsible to the human participants — LLM Agents can work together with UX researchers (human-AI collaboration) in a simulated pilot study manner to provide the desired early and immediate feedback for UX researchers to further iterate their design before testing with real human participants.

Why traditional usability testing is hard to scale

While usability testing remains crucial to UX, it's often messy, costly and resource-intensive.

Some common challenges include:

little to no early feedback to iterate before a live study

high cost and effort to recruit enough qualified participants

limited access to diverse populations, especially in cross-cultural or international contexts

researcher bias influencing tasks or analysis

recruiting the wrong users or using unsuitable digital assets

These hurdles often mean critical UX issues are discovered too late, after deadlines or budgets are already locked.

UXAgent: early signals without the cost

UXAgent introduces a new way of testing. It simulates pilot studies with AI Agents that "think" and "act" like human users.

The three key components are:

The Persona Generator: creates diverse, demographically varied simulated users

LLM Agent: the AI Agent that navigates and interacts with websites like a human would

Universal Browser Connector: lets the agents interact in real online environments.

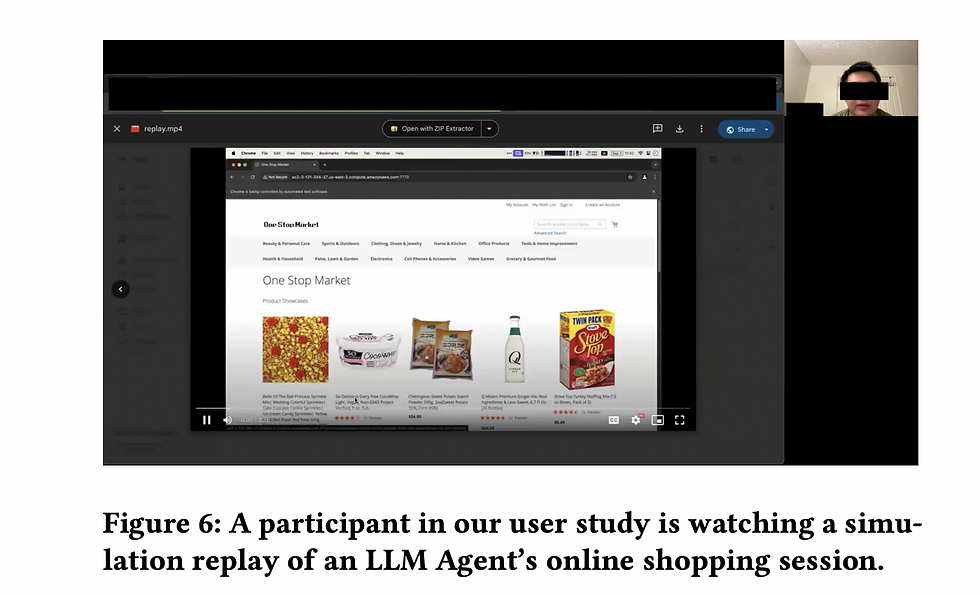
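To make the Persona Generator idea concrete, here is a minimal sketch of producing a varied set of simulated users. It is not UXAgent's implementation: the `Persona` class, the `generate_personas` function, the income bands and the prompt wording are all assumptions made for illustration, and the sketch simply cycles through attribute combinations instead of asking an LLM to write personas.

```python
import itertools
import random
from dataclasses import dataclass

# Hypothetical attribute values; the article only says personas varied
# across gender and income levels.
GENDERS = ["female", "male", "non-binary"]
INCOME_BANDS = ["low", "middle", "high"]


@dataclass(frozen=True)
class Persona:
    gender: str
    income_band: str

    def to_prompt(self) -> str:
        # Illustrative wording only, not the prompt used in the paper.
        return f"You are a {self.gender} shopper with a {self.income_band} income."


def generate_personas(n: int, seed: int = 0) -> list[Persona]:
    """Cycle through every gender/income combination so groups stay roughly balanced."""
    combos = [Persona(g, i) for g, i in itertools.product(GENDERS, INCOME_BANDS)]
    rng = random.Random(seed)  # fixed seed keeps the pilot reproducible
    rng.shuffle(combos)
    return [combos[k % len(combos)] for k in range(n)]


personas = generate_personas(60)
print(personas[0].to_prompt())
```

Each persona's prompt would then be handed to an agent before it starts browsing. Cycling through combinations is one simple way to avoid a pilot that over-represents a single group.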

Designers can also watch replay videos of each session or even interview the agents to unpack their reasoning.

So what do these simulated users actually offer?

These aren’t just bots clicking randomly. The agents are designed to mimic human thought processes. Built on a cognitive model, they can simulate goals, attention and decision-making.

At the heart of this is the Reasoning Trace system, which shows what the agent:

observed: "I see a 'Buy Now' button"

planned: "I’ll click to compare prices"

decided: "I’m looking for the cheapest option"

misunderstood: "I’m not sure what this link does"

This gives designers a deeper window into where users might get lost or misinterpret content.
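One way to picture a reasoning trace is as a list of typed entries that a designer can filter. The sketch below is an assumption about how such a trace could be stored, not UXAgent's actual data format: `Kind`, `TraceEntry` and `confusion_points` are hypothetical names, and the entries reuse the example quotes from the list above.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    OBSERVED = "observed"
    PLANNED = "planned"
    DECIDED = "decided"
    MISUNDERSTOOD = "misunderstood"


@dataclass
class TraceEntry:
    step: int   # position of the entry within the session
    kind: Kind  # which type of reasoning the entry records
    text: str   # the agent's own words


def confusion_points(trace: list[TraceEntry]) -> list[TraceEntry]:
    """Return the entries where the agent reported not understanding something."""
    return [entry for entry in trace if entry.kind is Kind.MISUNDERSTOOD]


trace = [
    TraceEntry(1, Kind.OBSERVED, "I see a 'Buy Now' button"),
    TraceEntry(2, Kind.PLANNED, "I'll click to compare prices"),
    TraceEntry(3, Kind.DECIDED, "I'm looking for the cheapest option"),
    TraceEntry(4, Kind.MISUNDERSTOOD, "I'm not sure what this link does"),
]

for entry in confusion_points(trace):
    print(f"step {entry.step}: {entry.text}")
```

Filtering for the "misunderstood" entries across many sessions would point to the screens where simulated users most often got lost.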

Reflection Module: simulated insight, real value

The Reflection Module captures what the agent “learned” and summarises session highlights like:

Product details didn’t help me decide between sizes.

These insights are similar to what you'd expect from post-test interviews, helping assess clarity, confidence, or content issues.

Does it actually work?

Apparently, yes. The researchers simulated a shopping task on WebArena, which works similarly to Amazon.com. They generated 60 diverse agent personas, varying across gender and income levels. Each agent was told to "buy a jacket" and was free to either make a purchase or end the session if it couldn't find something suitable.

For each session, the system recorded:

the full simulation result (including the agent’s reasoning and action traces)

a video replay of the session

the final outcome (purchase or quit)

Out of the 60 sessions, 45 agents (75%) completed a purchase, with an average spend of $57.50.

What did the UX designers think?

Many found the simulation results insightful and trustworthy and expressed interest in digging deeper into the findings. A standout feature was the Agent Interview Interface, which let them talk directly with the simulated users. Some appreciated the ability to "brainstorm" with the agents. They did want the agents' thoughts presented more clearly, though, "like a highlight or a summary."

The tool also uncovered valuable feedback about the system design itself. For example, one insight revealed that non-binary agents had a lower purchase rate than male and female agents. This led to a hypothesis: maybe product availability was to blame, with too few unisex options.

To verify this, the researchers checked the raw action logs and found that a non-binary agent had searched for a “unisex jacket,” landed in the women's section, and eventually abandoned the session. This supported their theory and gave them a clear, content-driven insight to act on.
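The comparison behind this kind of finding is a purchase rate per persona group. Here is a minimal sketch of that calculation, assuming each session is stored as a dictionary with a `gender` and an `outcome` field. The field names, the `purchase_rate_by_group` function and the six toy records are invented for illustration and are not the study's data.

```python
from collections import defaultdict


def purchase_rate_by_group(sessions, key="gender"):
    """Share of sessions in each group that ended in a purchase."""
    totals = defaultdict(int)
    purchases = defaultdict(int)
    for session in sessions:
        group = session[key]
        totals[group] += 1
        if session["outcome"] == "purchase":
            purchases[group] += 1
    return {group: purchases[group] / totals[group] for group in totals}


# Made-up toy records, NOT results from the paper.
sessions = [
    {"gender": "female", "outcome": "purchase"},
    {"gender": "female", "outcome": "purchase"},
    {"gender": "male", "outcome": "purchase"},
    {"gender": "male", "outcome": "quit"},
    {"gender": "non-binary", "outcome": "quit"},
    {"gender": "non-binary", "outcome": "quit"},
]

rates = purchase_rate_by_group(sessions)
for group, rate in rates.items():
    print(f"{group}: {rate:.0%}")
```

A gap between groups is only a starting point. As in the study, the next step is to read the underlying logs to see why the lower-rate sessions ended without a purchase.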

One UX researcher reflected:

Since it’s really quick and easy, I think it could encourage people to do a lot more iterative design.

There were mixed feelings about relying on simulated data instead of human participants, but many appreciated the ability to scale studies quickly:

It’s very hard to find pilot study of 60 participants or even just five or ten. So I think in this way I can conduct my pilot study with the large language model agent.

Limitations and ethical considerations

The UX researchers were concerned about data representation bias:

My feeling is the LLM assumes female agents will buy more, males less.

Others raised alarms about algorithmic decision bias, with one researcher worried it could "disturb my study design". They also questioned the agents' memory streams. Most humans quickly skim a page and disregard unimportant elements such as ads or irrelevant results, so the researchers speculated that the agents may fixate on too much detail, which makes their behaviour less realistic.

Still, the UX researchers enjoyed having access to this data, something that is not readily available with human participants.
