The messy reality of designing AI conversations: why ethical design needs to start at the beginning

- Ayesha Salim

- May 20, 2025

Updated: May 22, 2025

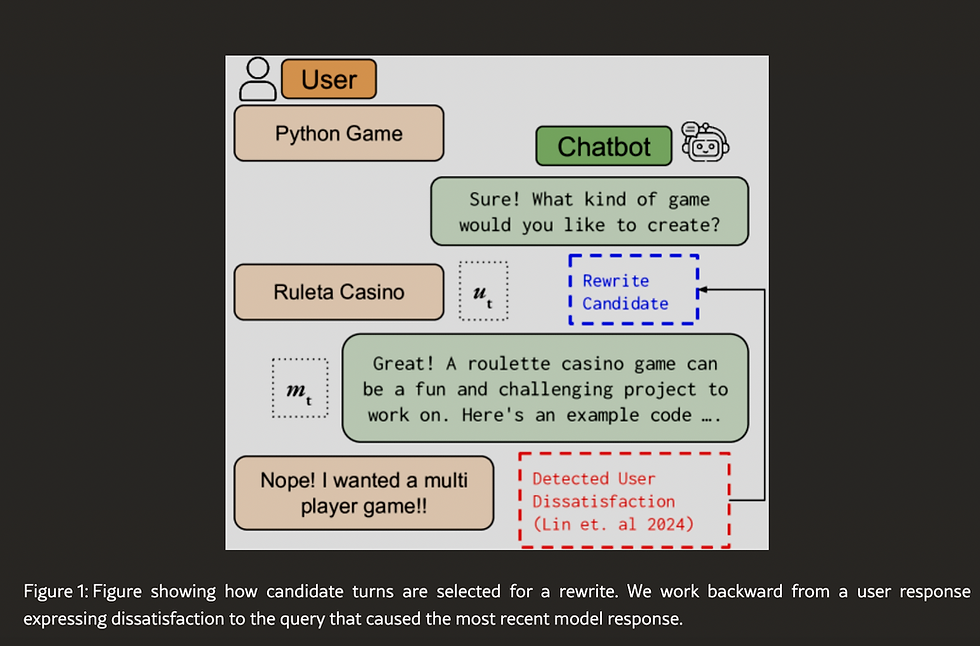

In the era of large language models (LLMs), it can be tricky to phrase prompts in a way that conversational AI can understand. Techniques like prompt rewriting are being developed to improve responses, but they are only one part of the puzzle.

Most users are not natural prompters, as I discovered when user testing a parking chatbot for a client. The chatbot, which was intended to help users understand violation codes and explore appeal options, caused more annoyance than clarity. The inherent ambiguity of natural language, coupled with an AI's possible lack of real-time context, requires users to be highly precise, which often leads to a trial-and-error approach to prompting.

When chatbots don't help users

These are some of the issues I encountered while user testing the parking chatbot:

- abrupt interactions: the chatbot often disregarded user input, immediately presenting options without clarification
- inconsistent experience: some situations allowed clickable selections, while others required specific keywords, creating confusion and unpredictability
- weak keyword recognition: simple keywords were frequently misunderstood, leading to frustrating dead ends or system crashes
- limited flexibility: no option to undo or retrace steps meant users had to start over if they made an error
- insensitive tone: in delicate matters like disability-related appeals, the chatbot’s language felt cold and impersonal, failing to accommodate emotional sensitivity

The chatbot didn't seem to know how it wanted to interact with the user, which made for a jarring and unreliable experience. It showed that understanding human input is fundamental.
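
As a rough illustration of how three of these issues (weak keyword recognition, no way to undo, and dead ends instead of clarification) might be approached, here is a minimal Python sketch. It is not the client's chatbot: the intents, the `Conversation` class, and the 0.75 similarity cutoff are all hypothetical choices made for the example. It uses the standard library's `difflib.get_close_matches` to tolerate typos, keeps a history so users can step back, and asks a clarifying question when nothing matches.

```python
from dataclasses import dataclass, field
from difflib import get_close_matches
from typing import Optional

# Hypothetical intents for a parking chatbot; keywords and labels are illustrative only.
INTENTS = {
    "appeal": "Start an appeal",
    "violation": "Explain a violation code",
    "payment": "Pay a fine",
}


def match_intent(text: str, cutoff: float = 0.75) -> Optional[str]:
    """Return the first intent keyword that closely matches a word in the input."""
    for word in text.lower().split():
        close = get_close_matches(word, list(INTENTS), n=1, cutoff=cutoff)
        if close:
            return close[0]
    return None


@dataclass
class Conversation:
    # Steps the user has visited so far, newest last.
    history: list[str] = field(default_factory=list)

    def handle(self, text: str) -> str:
        if text.strip().lower() in {"back", "undo"}:
            return self.go_back()
        intent = match_intent(text)
        if intent is None:
            # Ask a clarifying question instead of leaving the user at a dead end.
            options = ", ".join(INTENTS)
            return f"Sorry, I didn't catch that. Are you asking about: {options}?"
        self.history.append(intent)
        return f"OK: {INTENTS[intent]}. Type 'back' to return to the previous step."

    def go_back(self) -> str:
        # Drop the current step so the user does not have to start over.
        if self.history:
            self.history.pop()
        if not self.history:
            return "You're back at the start. What would you like help with?"
        return f"Back to: {INTENTS[self.history[-1]]}."
```

With this sketch, a misspelt message such as "I want to apeal my ticket" still reaches the appeal step, and typing "back" returns the user to the previous step rather than forcing a restart.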

The broader challenge of designing effective and helpful conversational AI systems

My experience with the parking chatbot showed how a set of user experience failures can arise from a poor understanding of natural language input (keyword recognition, lack of context use), inconsistent design flow, and a lack of robust error handling. But there are other issues users can face in getting a conversational AI to understand them, which means content and UX designers also need to consider other potential points of failure, such as functional limitations and even internal safety policies.

The paper "Conversational User-AI Intervention: A Study on Prompt Rewriting for Improved LLM Response Generation" highlights this. The study found that LLMs designed to refuse inappropriate prompts as a safety feature can frustrate users. The safety feature is there for good ethical reasons, but it also exposes the tension between upholding content moderation guidelines and respecting user intent.

LLMs and barriers for vulnerable users

The study also highlights that undesirable LLM behaviour disproportionately affects users with lower English proficiency and lower education levels. The challenges of interacting with LLMs are therefore unevenly distributed, placing greater obstacles in the way of already vulnerable populations. For these users, navigating an interface that is inconsistent, doesn't understand basic terms, and offers no clear path forward (like the parking chatbot) would be significantly harder.

Future potential: AI Agents as proactive guides

The second paper, “Agentic Workflows for Conversational Human-AI Interaction Design,” takes the conversation further. Instead of fixing broken inputs, it asks: what if the AI helped guide users from the start? The authors suggest using AI agents as “Needfinding Machines”—tools that actively uncover both explicit and latent user needs. This model promotes not just reactive assistance, but proactive understanding. It’s a powerful idea, especially when applied to high-friction, high-stakes interactions like parking appeals.

The paper also addresses:

- affordances: making it clear what the AI can and can’t do, something the parking chatbot sorely lacked
- contextual data and privacy: users should have control over what data they share, and the risks should be clearly explained
- avoiding overfitting to irrelevant context: too much data can confuse the AI. Thoughtful interaction design is needed to keep conversations on track and relevant
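
To make these three points more concrete, here is a small Python sketch of how they might look in practice. It is an assumption-laden illustration, not something taken from the paper: the capability lists, the `UserContext` class, and the field names are all hypothetical. The idea is to state capabilities up front, and to pass the AI only the data that the user has consented to share and that is relevant to the current task.

```python
from dataclasses import dataclass, field

# Hypothetical capability lists; a real system would derive these from what the bot can actually do.
CAN_DO = ["explain a violation code", "outline appeal options"]
CANNOT_DO = ["cancel a fine", "give legal advice"]


def capability_statement() -> str:
    """State up front what the assistant can and cannot help with."""
    return (
        "I can " + " and ".join(CAN_DO) + ". "
        "I can't " + " or ".join(CANNOT_DO) + "."
    )


@dataclass
class UserContext:
    # Everything known about the user, keyed by field name (hypothetical fields).
    data: dict[str, str] = field(default_factory=dict)
    # Field names the user has explicitly agreed to share.
    consented: set[str] = field(default_factory=set)

    def grant(self, key: str) -> None:
        self.consented.add(key)

    def revoke(self, key: str) -> None:
        self.consented.discard(key)

    def shareable(self, relevant: set[str]) -> dict[str, str]:
        """Return only fields that are both consented to and relevant to the task."""
        return {
            key: value
            for key, value in self.data.items()
            if key in self.consented and key in relevant
        }
```

For example, if a user has agreed to share their violation code and home address, and the current task only needs the violation code and disability status, `shareable` returns the violation code alone: the address is irrelevant, and the disability status has not been consented to.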

The paper's concept of proactively guiding the user towards clearer input offers a better path than simply attempting to fix vague prompts after the fact. Integrating AI agents throughout the process, rather than just at the final step, proved invaluable. User proxies helped simulate contextual personas, while refinement agents assisted in clarifying objectives. This proactive approach guided users toward expressing their needs with precision, significantly increasing the likelihood of a successful interaction.

What went wrong—and what we should do instead

Reflecting on the parking chatbot through the lens of these two papers, the core problem becomes clear: a failure to design ethically from the beginning.

The abruptness and inconsistency weren’t just usability flaws; they eroded trust.

The weak keyword recognition created extra cognitive load, disproportionately impacting those already underserved.

The chatbot’s silence when it didn’t know what to say left users stranded, rather than asking clarifying questions or offering help.

Ultimately, building ethical conversational AI is not an afterthought or a policy layer added on top. It requires anticipating user needs and designing flows that feel natural and logical, not abrupt or inconsistent.

To build better AI conversations, we need to:

- design with empathy: recognise when users are emotional, confused, or vulnerable, and respond with care
- create space for clarification: let the AI ask questions when things are unclear, rather than making incorrect assumptions
- ensure equity by design: make systems that understand diverse voices, not just technically perfect ones
- be transparent: clearly communicate what the system can do, and what it can’t
- respect privacy: give users real choices over what information they share and why

Designing truly helpful AI conversations means embedding empathy, clarity, equity, transparency, and privacy into every interaction from the start.